Let me set the stage. It’s Black Friday, and our e-commerce platform is humming along, soaking in the adrenaline of record-breaking traffic. Dashboards were glowing, engineers cautiously sipping their coffee, trying not to jinx the magic. For a moment, it felt like we were invincible.

Then, as if on cue, disaster struck. The error rate graph took a nosedive off a cliff. Orders froze mid-flight. Twitter, the unkindest of mirrors, erupted with complaints. “What now?” Ravi, my perpetually skeptical colleague, asked. His voice carried the weariness of someone who’d seen too many systems fail spectacularly.

The post-mortem wasn’t pretty. A cascading failure in an external payment gateway had rippled through our system. The result? A glorified pile-up of transactions waiting for a miracle. “How did we miss this?” someone muttered, and for once, no one had an answer.

“Because,” I finally said, “we keep assuming the universe plays fair. Clearly, it doesn’t.”

Enter chaos engineering — the art of deliberately creating disaster to build stronger systems. I’d read about Netflix’s Chaos Monkey, a tool designed to randomly kill servers in production, and I couldn’t help but admire the audacity. What if we could turn our system into a fighter — one that could take a punch and still come out swinging?

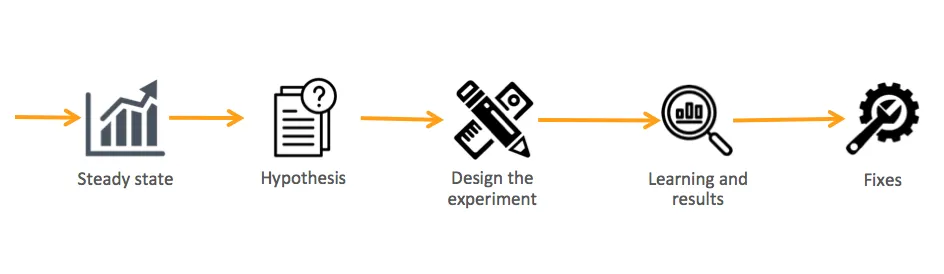

The heart of chaos engineering lies in controlled chaos. It’s not just about breaking things; it’s about breaking them with intent. The concept itself is straightforward: you simulate failures in a controlled environment and observe how your system behaves. But here’s the catch — it’s a lot harder than it sounds.

“So, what? We just… start breaking stuff?” Ravi asked when I pitched the idea.

“Exactly,” I said, grinning. “But, you know, scientifically.”

We started with a replica of our production environment — a Kubernetes cluster spinning away like a miniature universe. If Kubernetes is new to you, think of it as an orchestration platform for containers (tiny, self-sufficient environments where your apps run). Within this cluster, we created our sandbox: pods, namespaces, and service meshes ready to face the chaos.

To introduce the madness, I picked Chaos Mesh — a tool that specializes in orchestrating controlled experiments in Kubernetes. It works by defining chaos scenarios in YAML files.

Our first experiment was simple: inject 500ms latency into the payment gateway service. The goal? Test whether our retry mechanisms and circuit breakers would hold up. Here’s how it looked in code:

apiVersion: chaos-mesh.org/v1alpha1

kind: NetworkChaos

metadata:

name: payment-gateway-latency

spec:

action: delay

mode: one

selector:

labelSelectors:

"app": "payment-gateway"

delay:

latency: "500ms"

duration: "30s"

When I hit deploy, Ravi leaned in. “So, what happens if this crashes everything?”

“Then we fix it,” I said. “And I owe you lunch.”

The system initially seemed fine. Hystrix, our circuit breaker library, kicked in like a pro, cutting off failing requests. But then… the unexpected happened. Our retry logic went berserk, spawning an avalanche of redundant requests. Instead of handling the delay gracefully, we’d created a self-inflicted DDoS (Distributed Denial-of-Service). Ravi just raised an eyebrow and said, “You’re really bad at keeping lunch money.”

Fixing the fallout was a journey of discovery. First, we revamped our retry logic with exponential backoff — a strategy that makes each retry wait progressively longer, like a kid testing a parent’s patience. Then, we fine-tuned our load balancer to handle spikes more gracefully. Finally, we expanded our observability stack, adding metrics for retries, circuit breaker activity, and overall system health.

By the time we ran the experiment again, the system behaved like a seasoned boxer. Latency? No problem. The retries worked without creating chaos, circuit breakers stepped in where needed, and the load balancer handled the traffic without breaking a sweat. Ravi even bought me lunch — though he made me pick the cheapest place in town.

Chaos engineering taught me more than I expected. It’s not just a technical exercise; it’s a mindset. It’s about questioning assumptions, confronting fears, and embracing failure as a teacher. We integrated chaos experiments into our CI/CD pipeline, turning them into regular tests. Post-mortems became celebrations of what we’d learned, rather than finger-pointing sessions. And our systems? Stronger than ever.

But chaos engineering isn’t just about the tech. It’s about the culture you build around it. It’s about teaching your team to think like detectives, to dig into logs and metrics with curiosity instead of dread. It’s about laughing at the absurdity of breaking things on purpose and marveling at how much you learn when you do.

So here’s my challenge to you: embrace the chaos. Whether you’re running a small app or a massive platform, the principles hold true. Build your gladiator arena, unleash the chaos, and watch your system evolve. It’s scary, sure. But it’s also exhilarating. Because resilience isn’t something you stumble into — it’s something you engineer, one glorious failure at a time.